Robotics

Our work concerns vision and, more generally, on-board data processing for autonomous navigation and environment modeling by one or more robots. It is closely tied to the Copernic Lab, which brings together experimental and methodological resources for autonomous robotics at ONERA Palaiseau.

Visual Localization

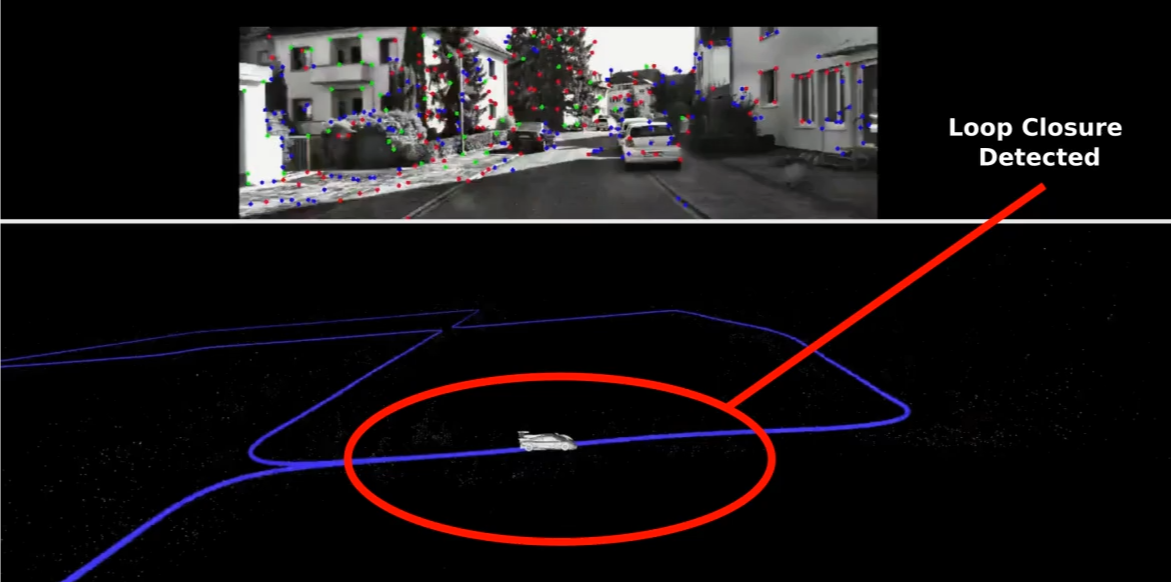

OV2SLAM: Online Versatile Visual SLAM

Maxime Ferrera, Alexandre Eudes, Julien Moras, Martial Sanfourche and Guy Le Besnerais

Many applications of Visual SLAM, such as augmented reality, virtual reality, robotics or autonomous driving, require versatile, robust and precise solutions, most often with real-time capability. We propose a fully online algorithm that handles both monocular and stereo camera setups, various map scales, and frame rates ranging from a few hertz up to several hundred. It combines numerous recent contributions in visual localization within an efficient multi-threaded architecture.

[GitHub]