Semi-supervised Class Incremental Learning

Alexis Lechat, Stéphane Herbin and Frédéric Jurie

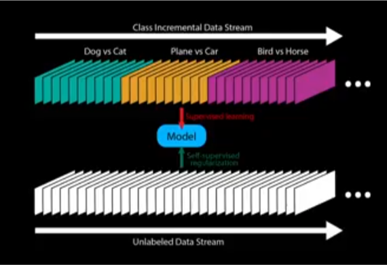

Class-incremental learning is a sequential process in which batches of samples annotated with new classes are introduced during the learning phase. The main objective of our work is to reduce so-called “catastrophic forgetting”, i.e. a dramatic drop in classification performance on old classes as new ones are added. We propose to regularize the classifier and give the feature space a more stable structure by using unlabelled images in addition to the annotated batches. Indeed, in several applied contexts, such as web image classification or remote sensing data interpretation, large amounts of unlabelled images are available. We demonstrate on two image data sets, MNIST and STL-10, that our approach improves the global performance of classifiers learned with an incremental learning protocol, even when the annotated batches are small.
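To make the protocol concrete, here is a minimal sketch of how labelled samples might be split into sequential batches that each introduce new classes, and how the drop in accuracy on old classes could be measured. It is an illustration only, not the authors' implementation: the function names `class_incremental_batches` and `forgetting`, the `classes_per_step` parameter, and the ordering of classes by label are all assumptions, and the regularization with unlabelled images is not shown.

```python
from collections import defaultdict


def class_incremental_batches(samples, classes_per_step):
    """Split (input, label) pairs into sequential batches of new classes.

    Hypothetical helper: classes are introduced in sorted label order,
    `classes_per_step` at a time.
    """
    by_class = defaultdict(list)
    for x, y in samples:
        by_class[y].append((x, y))

    ordered = sorted(by_class)
    batches = []
    for i in range(0, len(ordered), classes_per_step):
        new_classes = ordered[i:i + classes_per_step]
        batch = [s for c in new_classes for s in by_class[c]]
        batches.append((new_classes, batch))
    return batches


def forgetting(acc_before, acc_after):
    """Per-class accuracy drop on old classes after a new step.

    Both arguments map class label -> accuracy; only classes present
    in `acc_before` (the old classes) are reported.
    """
    return {c: acc_before[c] - acc_after[c] for c in acc_before}


if __name__ == "__main__":
    # Toy data: string placeholders stand in for images.
    data = [("a", 0), ("b", 1), ("c", 2), ("d", 3), ("e", 0)]
    for new_classes, batch in class_incremental_batches(data, 2):
        print(new_classes, len(batch))
    print(forgetting({0: 0.75, 1: 0.75}, {0: 0.25, 1: 0.5}))
```

A positive value returned by `forgetting` for an old class means its accuracy fell after the new classes were learned, which is the effect the proposed regularization aims to reduce.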
